HCI and User Experience Research

“Poets say science takes away from the beauty of the stars - mere globs of gas atoms. I too can see the stars on a desert night, and feel them. But do I see less or more?”

Richard Feynman, 1988

Sense of Place in a Dematerialized World

Summary

The video you’re watching captures participant feedback on the VR environment they experienced during the study. This experiment was designed to address one central question: What are the criteria for creating a true sense of place in a virtual environment?

In the following section, you can explore the research methods and data analysis, which blend user feedback, behavioral observation, and physiological measurements to uncover the sensory and psychological cues that shape presence and trust in virtual worlds.

-

How can a virtual, dematerialized environment evoke the same emotional and cognitive connection as a physical place? This project set out to uncover the criteria that create a true sense of place in virtual worlds, bridging architecture, human psychology, trust, and neuroscience. The goal was to design an immersive experience that could capture not just the look, but the feeling of being somewhere real.

-

Using a mixed-methods research approach, I designed a custom VR environment modeled after the real-world acoustics of an existing location. The study involved:

Usability Testing & Behavioral Observation: 11 participants navigated the VR space to locate a specific sound source, encouraging exploration and engagement.

Physiological Data Collection: Captured GSR (Galvanic Skin Response) and HRV (Heart Rate Variability) as physiological indicators of trust and cognitive load.

Quantitative Analysis: Applied gradient-based GSR signal processing with Fast Fourier Transform (FFT) and Welch Power Spectral Density (WPSD) to identify frequency patterns across experimental conditions.

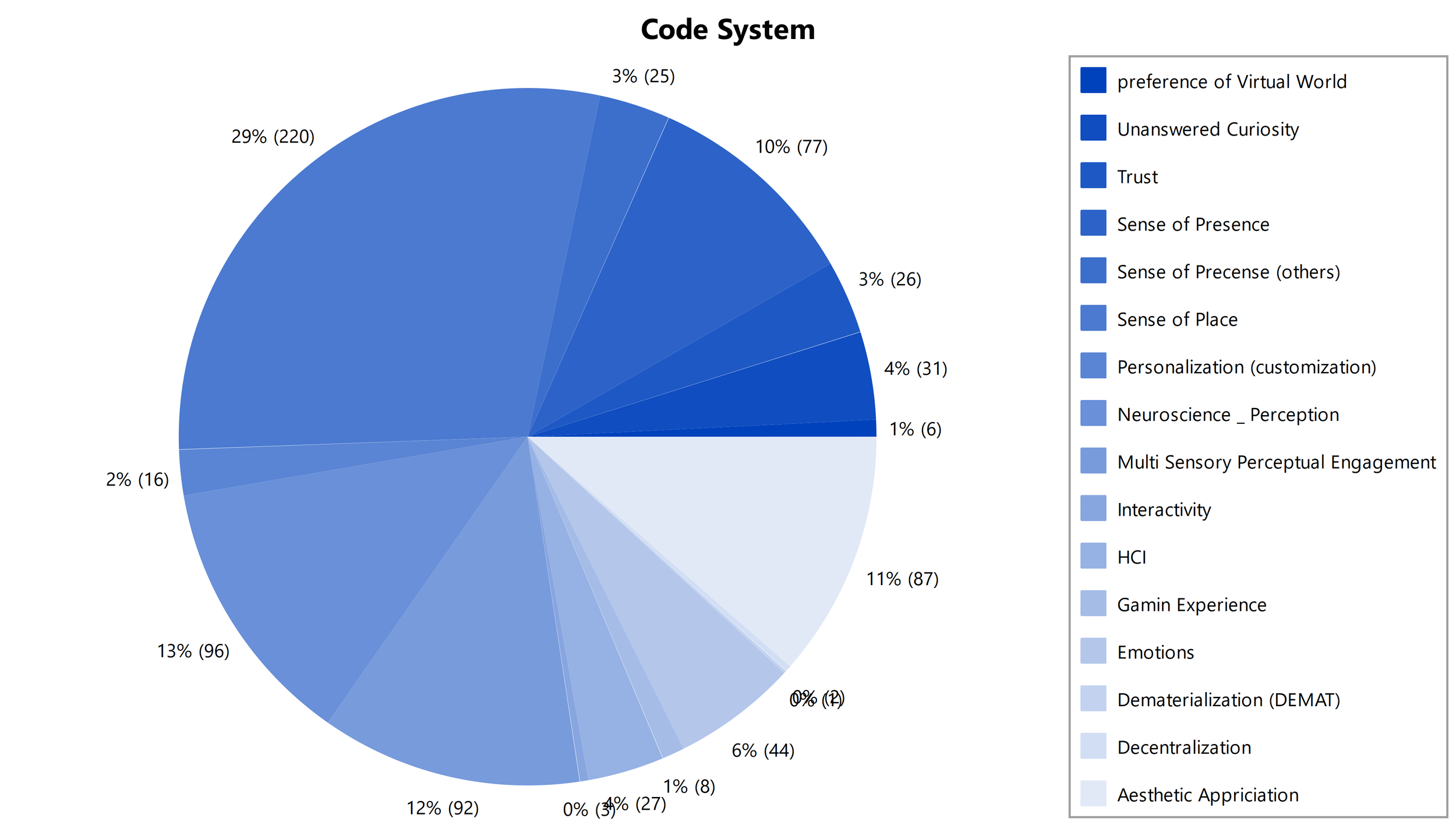

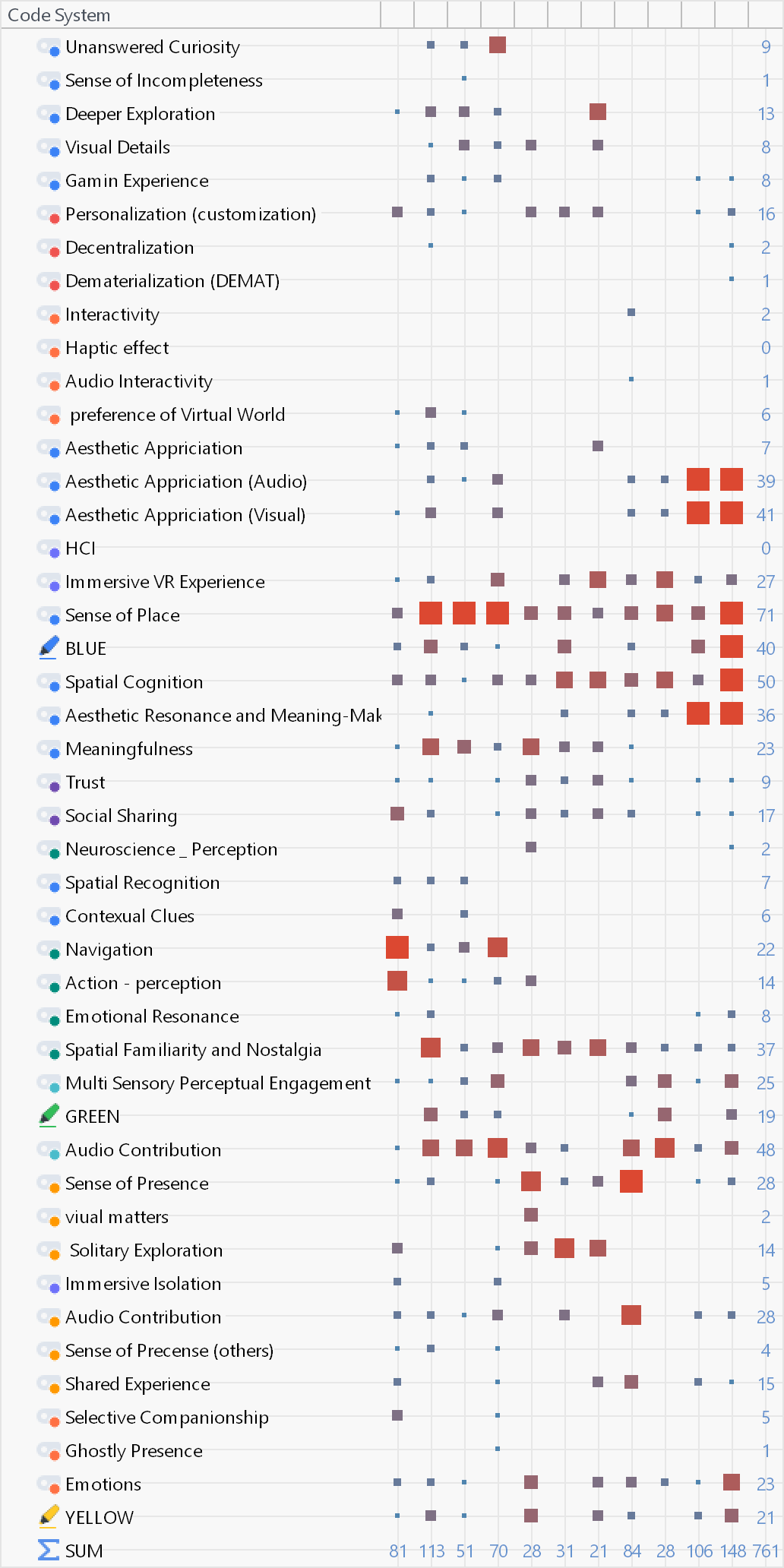

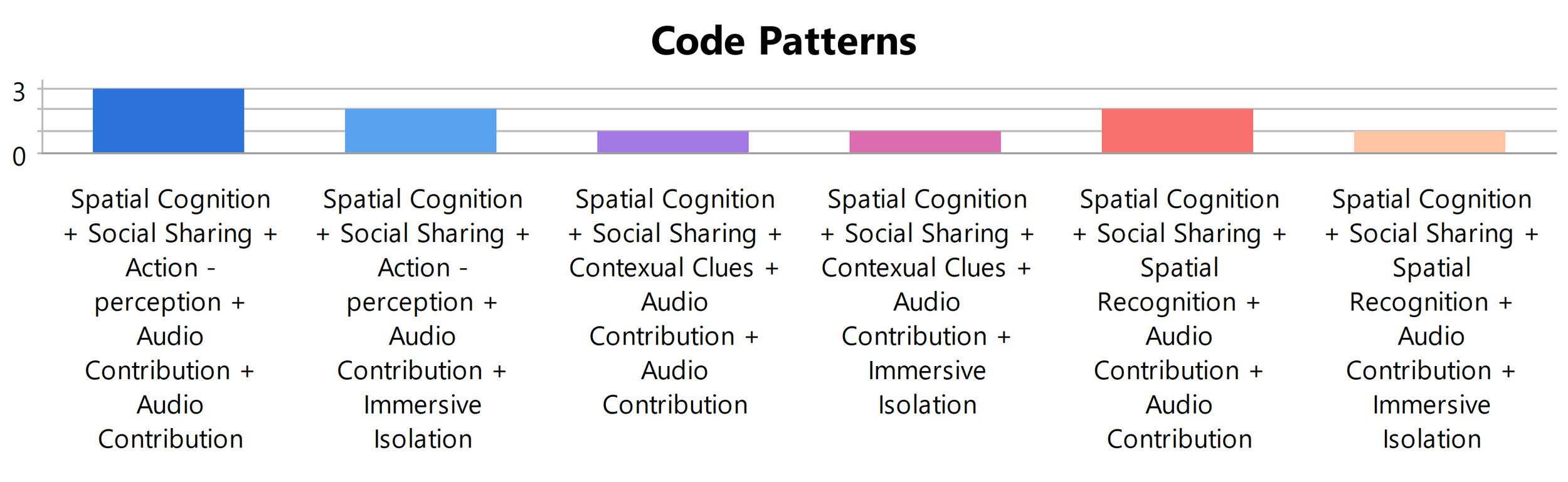

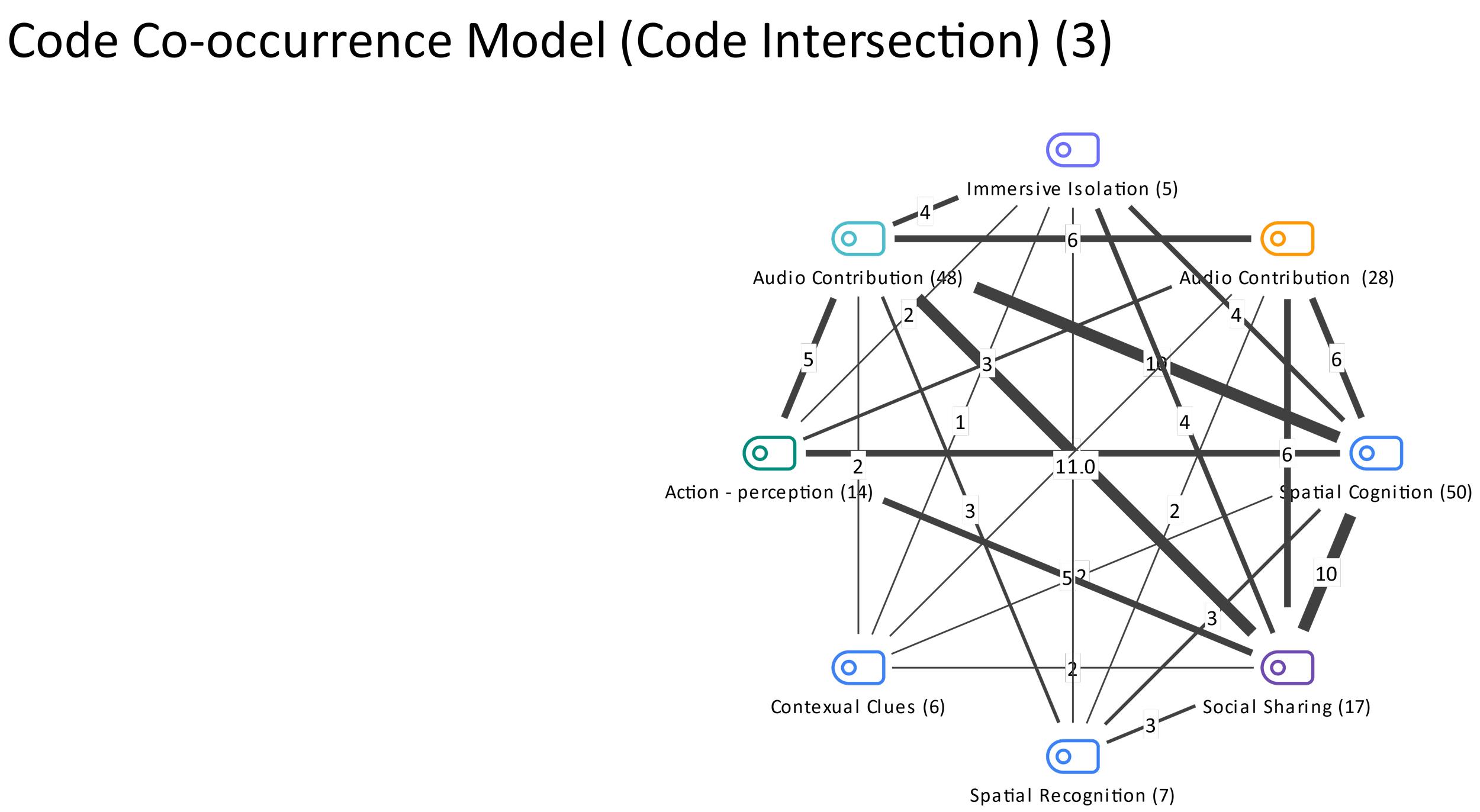

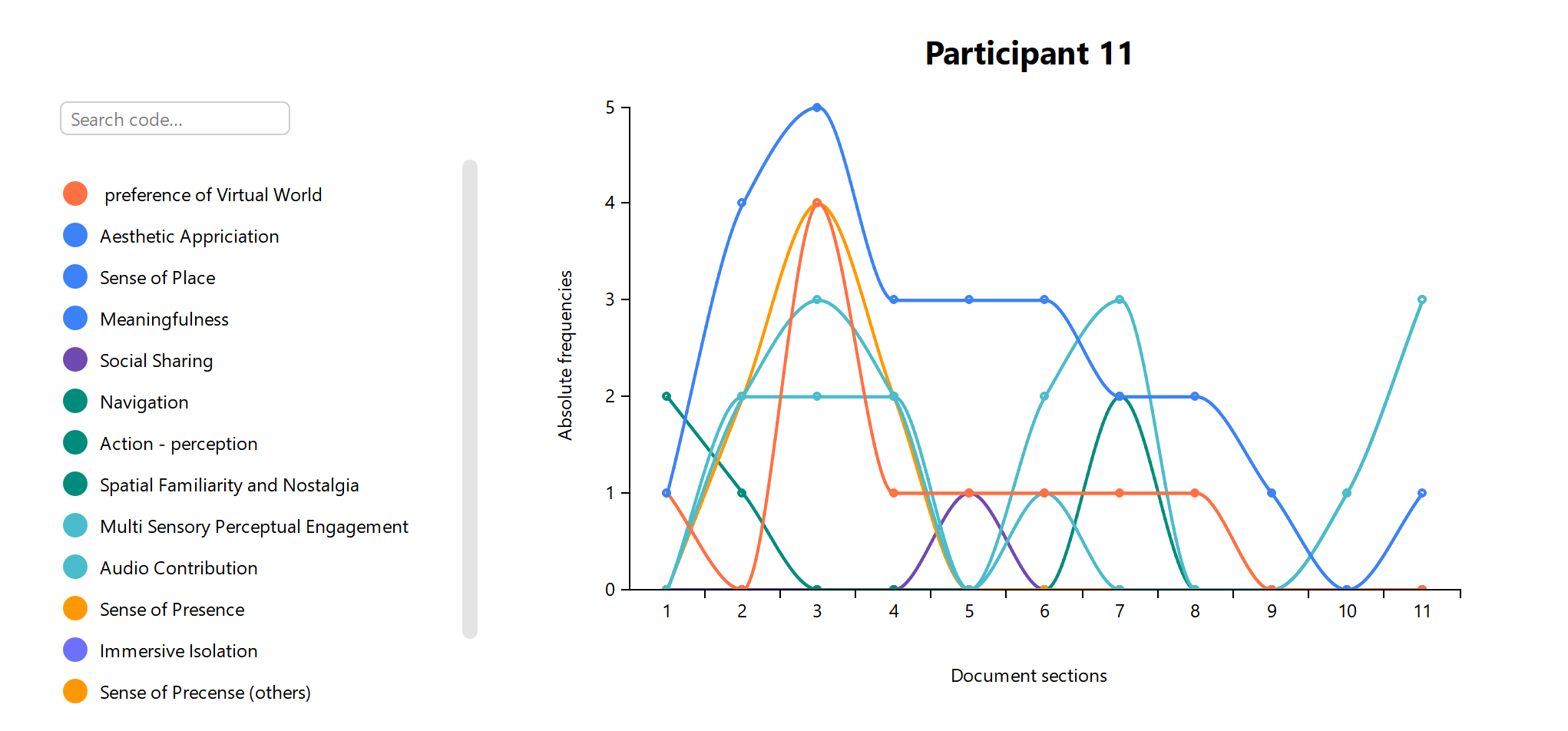

Qualitative Analysis: Conducted in-depth, semi-structured interviews; coded and analyzed with MAXQDA using a grounded theory approach to identify recurring themes and patterns in participant feedback.
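The quantitative step listed above (taking the gradient of the GSR trace, then estimating its frequency content with FFT-based Welch averaging) can be illustrated with a minimal sketch. This is not the study's actual pipeline: the function names `gsr_gradient` and `welch_psd`, the 32 Hz sampling rate, the 256-sample segment length, and the synthetic signal are all assumptions made for illustration. In practice, `scipy.signal.welch` offers a tested implementation of the same estimator.

```python
import numpy as np


def gsr_gradient(gsr, fs):
    """Return the first derivative of a GSR trace (signal units per second)."""
    return np.gradient(np.asarray(gsr, dtype=float), 1.0 / fs)


def welch_psd(x, fs, nperseg=256):
    """Welch PSD estimate: average the periodograms of Hann-windowed,
    50%-overlapping segments. Returns (frequencies in Hz, one-sided PSD)."""
    x = np.asarray(x, dtype=float)
    if len(x) < nperseg:
        raise ValueError("signal is shorter than one segment")
    step = nperseg // 2
    window = np.hanning(nperseg)
    scale = fs * np.sum(window ** 2)
    periodograms = []
    for start in range(0, len(x) - nperseg + 1, step):
        segment = x[start:start + nperseg]
        segment = segment - segment.mean()  # remove the per-segment offset
        spectrum = np.fft.rfft(segment * window)
        periodograms.append(np.abs(spectrum) ** 2 / scale)
    psd = np.mean(periodograms, axis=0)
    # Fold negative frequencies into the one-sided estimate
    # (the DC bin, and the Nyquist bin for even nperseg, are not doubled).
    if nperseg % 2 == 0:
        psd[1:-1] *= 2
    else:
        psd[1:] *= 2
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, psd


# Synthetic stand-in for a recorded GSR trace (assumed values, not study data):
# slow drift + a 0.25 Hz oscillation + a little measurement noise.
fs = 32.0
t = np.arange(0, 120, 1.0 / fs)
rng = np.random.default_rng(0)
gsr = 2.0 + 0.01 * t + 0.5 * np.sin(2 * np.pi * 0.25 * t) + 0.01 * rng.standard_normal(t.size)

freqs, psd = welch_psd(gsr_gradient(gsr, fs), fs)
dominant_hz = freqs[np.argmax(psd)]
print(f"dominant frequency: {dominant_hz:.3f} Hz")
```

Running the same two functions on the traces from each experimental condition would give one spectrum per condition, which can then be compared bin by bin.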

-

Identified Core Sensory Cues: Discovered how realistic soundscapes can significantly enhance trust, presence, and the sense of place in virtual environments.

Cross-Disciplinary Insights: Created a bridge between human-computer interaction, environmental psychology, and spatial design, producing a repeatable framework for immersive experience design.

Scalable Application: Findings can inform UX strategy for VR/AR platforms, digital twin environments, and future-ready entertainment and simulation systems.

Human-Centered Design Blueprint: Delivered actionable design principles for crafting virtual worlds that resonate emotionally, not just visually.

Sense of Presence in Telepresence Communication

Summary

This study explored how to design remote communication systems that go beyond video calls, enabling people to feel each other’s presence across distance. Through iterative prototyping, we tested scenarios such as two individuals watching their favorite sports game from separate locations. We captured their physiological signals (GSR, HRV), translated them into interactive audio and visual feedback, and projected these into each other’s environments.

The research combined mixed-methods UX (interviews, behavioral analysis, journey mapping) with human-centered design to investigate how such multisensory feedback can enhance emotional engagement and strengthen the sense of presence in dematerialized communication spaces.

-

What will remote communication feel like in the post-Zoom era?

This research explored how to create emotional co-presence across distance, enabling two people to feel each other’s reactions in real time while sharing a remote experience, such as watching a favorite sports game together. The challenge was to move beyond flat video calls toward immersive, emotionally intelligent telepresence.

-

Prototyping & Iteration: Developed multiple telepresence prototypes using real-time biofeedback as an interaction layer.

Scenario Testing: Two participants watched the same game remotely; physiological data (GSR, HRV) was captured to detect emotional arousal.

Interactive Feedback Loop: Converted biometric data into responsive audio-visual cues projected into the other person’s environment.

Mixed Methods Research: Combined interviews, behavioral observation, and journey mapping to understand emotional impact and UX opportunities.

Cross-Disciplinary Collaboration: Worked with engineers and cognitive scientists to refine prototypes into scalable interaction models.
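The interactive feedback loop described above can be sketched as a small mapping from a physiological sample to a cue intensity. The source does not describe how the prototypes performed this conversion, so everything here is hypothetical: the `Cue` and `ArousalMapper` names, the baseline and ceiling calibration values, the exponential smoothing, and the choice of brightness and volume as outputs.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    brightness: float  # 0.0 (dim) to 1.0 (full), for a light or projection
    volume: float      # 0.0 (silent) to 1.0 (full), for an ambient sound


class ArousalMapper:
    """Map incoming GSR samples to a smoothed audio-visual cue (hypothetical design)."""

    def __init__(self, baseline, ceiling, smoothing=0.2):
        if ceiling <= baseline:
            raise ValueError("ceiling must be greater than baseline")
        self.baseline = baseline    # assumed resting GSR level for this person
        self.ceiling = ceiling      # assumed GSR level treated as maximum arousal
        self.smoothing = smoothing  # 0..1, higher reacts faster
        self.level = 0.0            # current smoothed arousal, 0..1

    def update(self, gsr_sample):
        # Normalize against the calibration range and clamp to [0, 1].
        raw = (gsr_sample - self.baseline) / (self.ceiling - self.baseline)
        raw = min(1.0, max(0.0, raw))
        # Exponential smoothing keeps the cue from flickering on noisy samples.
        self.level += self.smoothing * (raw - self.level)
        return Cue(brightness=self.level, volume=0.5 * self.level)


mapper = ArousalMapper(baseline=2.0, ceiling=6.0, smoothing=0.5)
for sample in (2.0, 4.0, 6.0, 6.0, 3.0):
    cue = mapper.update(sample)
    print(f"gsr={sample:.1f} brightness={cue.brightness:.3f} volume={cue.volume:.3f}")
```

In a two-person setup, each participant's mapper would drive the cue rendered in the other participant's room. Per-person calibration of `baseline` and `ceiling` matters because resting skin conductance varies widely between individuals.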

-

60% Increase in Emotional Engagement: Participants reported stronger connection and immersion compared to standard video calls.

Defined New Telepresence UX Principles: Identified key sensory elements that boost presence, empathy, and shared experience.

Scalable Concept for Post-Zoom Platforms: Research informs future collaboration tools, entertainment streaming, and hybrid work solutions.

Shift in Remote Interaction Paradigm: Demonstrated that emotion-driven biofeedback can make remote communication feel human again.